Stefan Pohl Computer Chess

Home of famous UHO openings and EAS Ratinglist

Latest Website-News (2026/03/05): Testrun of Reckless 0.9.0 finished: +13 Celo to Reckless 260108. +13 Celo in only 2 months - very impressive at this extremely high level of strength! Reckless is now only 38 Celo behind Stockfish 18 - incredible.

My gift to the community: a much better cutechess GUI. It was made by Patrick Leonhardt (PlentyChess), and I paid for the work. Learn more about the new cutechess on talkchess and download it right here. Please note that this is an early release, so bugs are still possible; please report them on talkchess or contact me.

Ceres Binary here, Ceres Installation Guide here, Ceres Nets here

Don't forget to take a look at my EAS-Ratinglist (the world's first engine ratinglist that measures not the strength of engines, but the engines' style of play).

Stay tuned.

UHO-Top15 Engines Ratinglist (+ regular testing of Stockfish Dev-versions)

Playing conditions:

The 15 strongest engines and the latest Stockfish Dev version play 1000 games against each opponent, so each engine plays 15000 games. This is a round-robin tournament with 120000 games overall!
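The game counts follow from simple round-robin arithmetic: 16 engines, each facing 15 opponents for 1000 games. A minimal sketch (the function name is hypothetical, chosen for illustration):

```python
def round_robin_games(num_engines: int, games_per_pairing: int) -> tuple[int, int]:
    """Return (games per engine, total games) for a full round robin."""
    opponents = num_engines - 1
    per_engine = opponents * games_per_pairing
    # Every game involves two engines, so summing over engines counts each game twice.
    total = num_engines * per_engine // 2
    return per_engine, total


per_engine, total = round_robin_games(16, 1000)
print(per_engine, total)  # 15000 120000
```

Equivalently, the total is the number of pairings, 16 * 15 / 2 = 120, times 1000 games per pairing.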

- Hardware: AMD Ryzen 9 7945HX 16-core (32 threads) notebook with 64GB RAM (since 2024/10/08), 2 identical machines.
- OS: Windows 11 64bit
- Speed (single thread, TurboBoost mode switched off, chess starting position): Stockfish 17 reaches 600 kn/s (with 26 games running simultaneously).
- Hash: 512MB per engine
- GUI: Cutechess-cli (the GUI ends a game when a 5-piece endgame is on the board; all other games are played until mate or a draw by chess rules: threefold repetition, 50-move rule, stalemate)
- Tablebases: none for the engines, 5-piece Syzygy for cutechess-cli
- Openings: My UHO_2022_6mvs_+120_+129 openings are used (first 500 lines). The UHO 2022 openings are part of my Anti Draw Openings download package.
- Ponder, Large Memory Pages & learning: Off
- Thinking time: 3min+1sec per game/engine. One testrun (15000 games) takes around 80-85 hours (about 3.5 days).
- Stockfish version numbers: the date of the latest patch included in the Stockfish source code (not the release date of the engine file), written backwards as year, month, day (example: 200807 = August 7, 2020). The SF compile used is the AVX512 compile, which is the fastest on my AMD Ryzen CPU. SF binaries are taken from https://github.com/official-stockfish/Stockfish/releases (except the official SF release versions, which are taken from the official Stockfish website).

Read the explanation of why I chose my testing conditions the way I did here
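Because the Stockfish version numbers are dates written as YYMMDD, they can be decoded mechanically. A minimal Python sketch, assuming every dev version number is exactly six digits (the helper name is hypothetical):

```python
from datetime import date, datetime


def sf_version_to_date(version: str) -> date:
    """Decode a YYMMDD Stockfish dev version number (e.g. "200807") into a date.

    Assumption: the number is always six digits. strptime's %y maps
    two-digit years 00-68 to 2000-2068, which covers all SF dev builds.
    """
    return datetime.strptime(version, "%y%m%d").date()


print(sf_version_to_date("200807").strftime("%B %d, %Y"))  # August 07, 2020
print(sf_version_to_date("260218"))                        # 2026-02-18
```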

Latest update: 2026/03/05: Reckless 0.9.0 (+13 Celo to Reckless 260108)

(The Ordo calculation is anchored at Stockfish 17.1 = 3854 Celo. Please note that there are no human or "realistic" Elo numbers in engine chess, because the engines have reached superhuman strength, so I decided to use "Celo" (= Computer Elo) instead.)
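Game results only determine rating differences, so the absolute numbers need one fixed reference point. The sketch below illustrates what anchoring at "Stockfish 17.1 = 3854" means: every relative rating is shifted by the same offset. It illustrates the idea only, not Ordo's internals; the function name and the relative ratings are hypothetical, not values from the list.

```python
def anchor_ratings(relative: dict[str, float], anchor: str,
                   anchor_value: float) -> dict[str, float]:
    """Shift all ratings by one offset so that `anchor` gets exactly `anchor_value`.

    The differences between engines stay unchanged; only the zero point moves.
    """
    offset = anchor_value - relative[anchor]
    return {name: rating + offset for name, rating in relative.items()}


# Hypothetical relative ratings (NOT taken from the ratinglist).
rel = {"Engine A": 20.0, "Stockfish 17.1": 0.0, "Engine B": -45.0}
print(anchor_ratings(rel, "Stockfish 17.1", 3854))
# {'Engine A': 3874.0, 'Stockfish 17.1': 3854.0, 'Engine B': 3809.0}
```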

- See the tournament cross-table as a picture (made with the Fritz20 GUI) here
- See the individual statistics of engine results here
- See the Engines Aggressiveness Score Ratinglist here
- See the gamepair statistics of the UHO-Top15 Ratinglist here
- See the full UHO Ratinglist (with EAS-Ratinglist) here
- Download the current gamebase, the full-list gamebase and the archive here
- Download all interesting wins (filtered by EAS-Tool) from the full UHO Ratinglist here
- See the old SPCC-Ratinglist (full list) and EAS-List from 2020 until 2023/08/28 here

(Best Stockfish Celo: Stockfish 260218: 3876 Celo; the latest full release (Stockfish 18) has 3873 Celo.)

| # | Program | Celo | + | - | Games | Score | Av.Op. | Draws |
|---|---|---|---|---|---|---|---|---|
| 1 | Stockfish 260218 a512 | 3877 | 4 | 4 | 15000 | 67.5% | 3745 | 49.2% |
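To show how a score against an average opponent relates to a rating, here is a minimal sketch of the standard logistic Elo formula, D = 400 * log10(s / (1 - s)). It is not how the list is computed: Ordo fits ratings to all games at once, so this naive single-row estimate (3872 for the row above) does not reproduce the listed 3877 exactly. The function name is hypothetical.

```python
import math


def elo_diff_from_score(score: float) -> float:
    """Rating difference implied by an expected score under the logistic Elo model."""
    if not 0.0 < score < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    return 400.0 * math.log10(score / (1.0 - score))


# Stockfish 260218: 67.5% against an average opponent rated 3745 Celo.
estimate = 3745 + elo_diff_from_score(0.675)
print(round(estimate))  # 3872
```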

Games: 120000 (finished)

Below is the UHO-Top15 Ratinglist recalculated with gamepairs (using my Gamepair Rescoring Tool V1.5), with the Ordo calculation anchored at Stockfish 17.1 = 3854 Celo. This realizes the idea of Vondele (Stockfish maintainer): "Thinking uniquely in game pairs makes sense with the biased openings used these days. While pentanomial makes sense it is a bit complicated so we could simplify and score game pairs only (not games) as W-L-D (a traditional score of 2-0, or 1.5-0.5 is just a W)."

Please note that the errorbar here is +/-5.5 Celo (= 11 Celo overall), compared to +/-4 Celo in the classical ratinglist above, so the errorbar here is 1.375 times wider. But the gaps between results are, on average, at least 2x and up to 2.5x bigger when calculating with gamepairs. So the statistical reliability of the results is much better in the gamepair ratinglist than in the classical ratinglist. CFS means Chance For Superiority in percent, calculated by Ordo.

See the head-to-head gamepair statistics of the UHO-Top15 Ratinglist here

# PLAYER : Celo Error Pairs W D L (%) CFS(%)
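The gamepair scoring rule quoted above can be sketched in a few lines of Python. This is not the Gamepair Rescoring Tool itself; it assumes (hypothetically) that the two games of each pair, the same opening played with colours reversed, appear consecutively in the result list, each scored 1, 0.5 or 0 from the tested engine's point of view. The function names are hypothetical.

```python
from collections import Counter


def rescore_pair(first: float, second: float) -> str:
    """Classify one game pair from the tested engine's point of view."""
    total = first + second
    if total > 1.0:   # 2-0 or 1.5-0.5 counts as a won pair
        return "W"
    if total < 1.0:   # 0-2 or 0.5-1.5 counts as a lost pair
        return "L"
    return "D"        # 1-1: two draws, or one win and one loss


def rescore_games(results: list[float]) -> Counter:
    """Group consecutive games into pairs and count W/D/L pairs."""
    if len(results) % 2:
        raise ValueError("expected an even number of games")
    return Counter(rescore_pair(a, b) for a, b in zip(results[0::2], results[1::2]))


# Hypothetical pairs: (1, 0.5), (0.5, 0.5), (1, 0), (0, 0.5)
counts = rescore_games([1, 0.5, 0.5, 0.5, 1, 0, 0, 0.5])
print(counts["W"], counts["D"], counts["L"])  # 1 2 1
```

Note that a 1-1 pair made of one win and one loss counts as a drawn pair, just like two draws, which is exactly what removes most of the opening bias.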

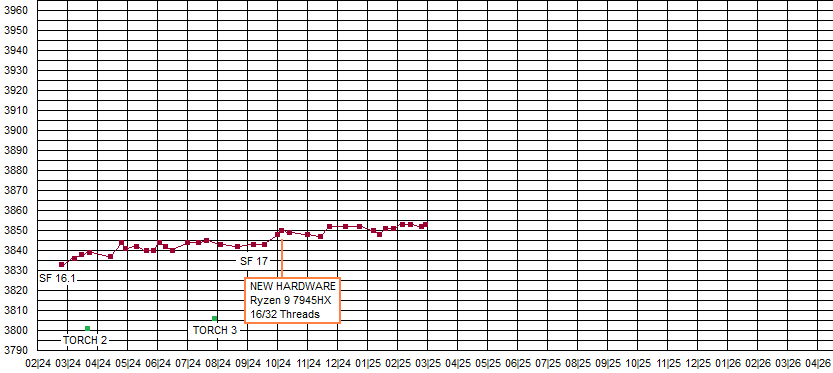

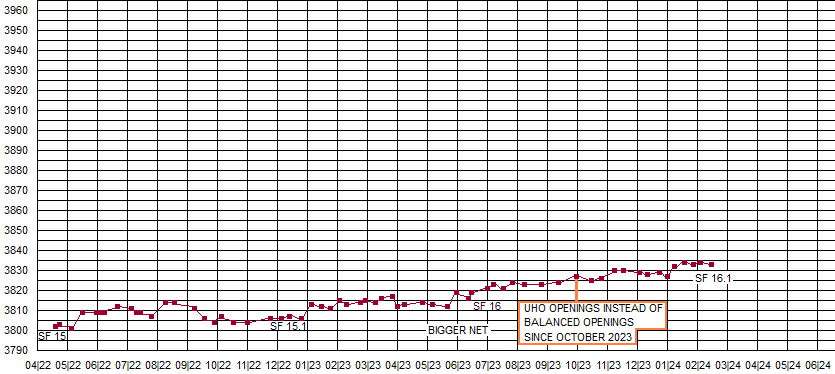

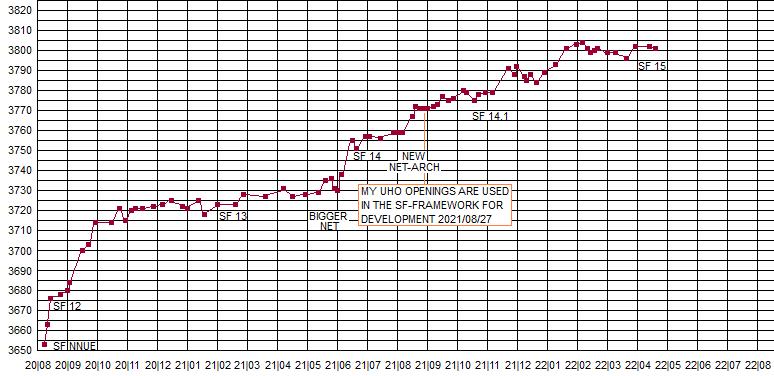

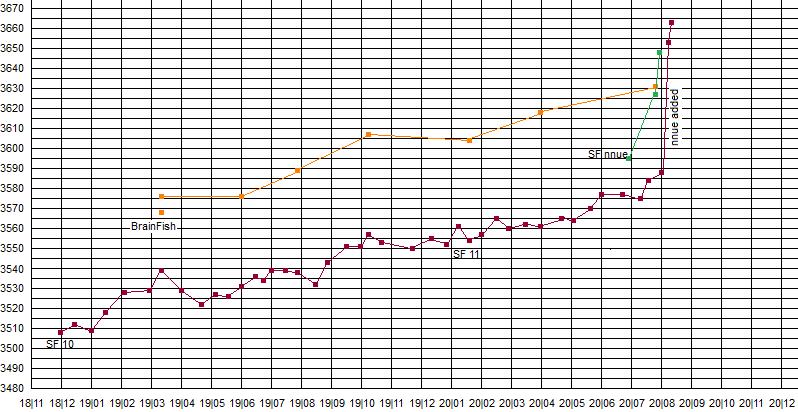

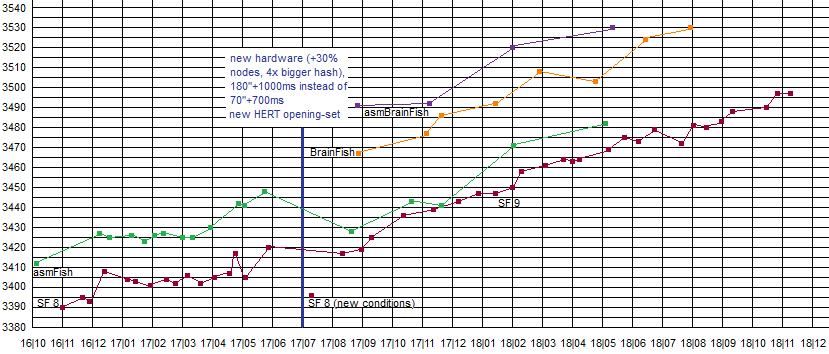

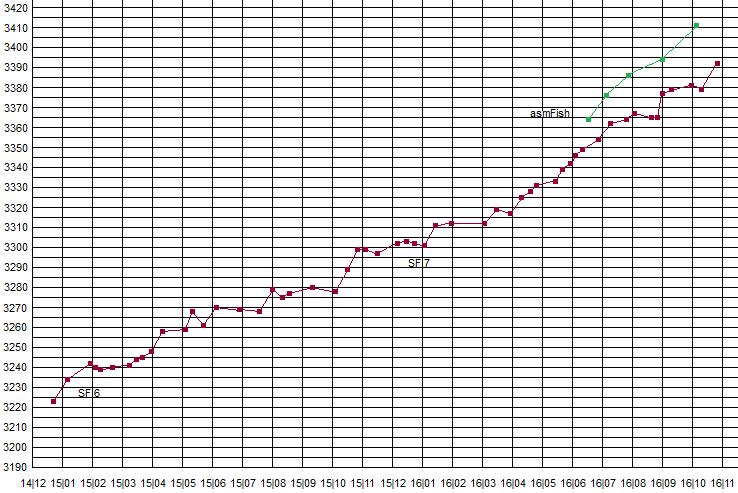

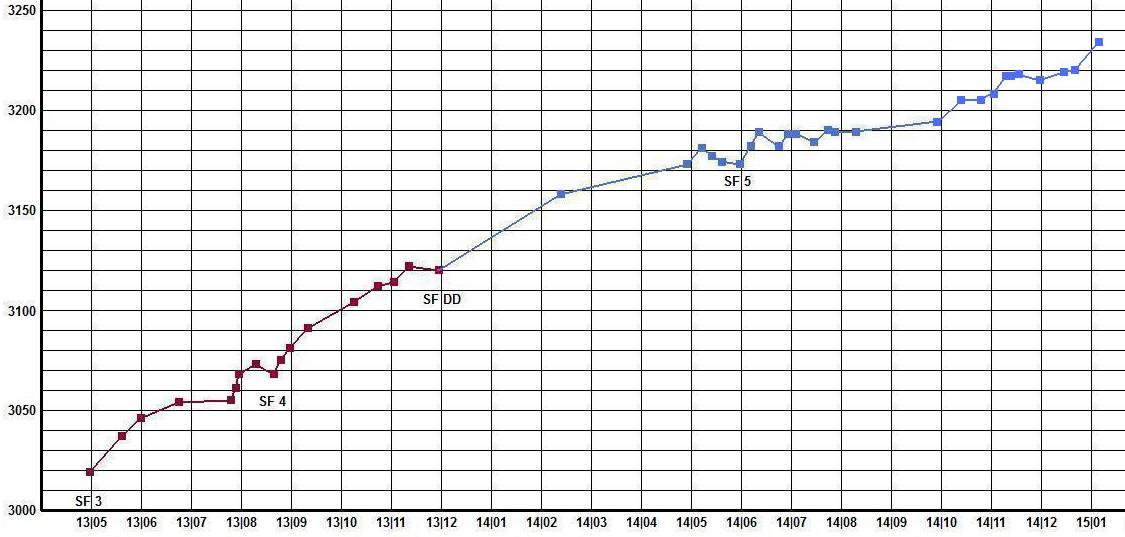

-------------------------------------------------------------------

Aborted testruns, because the tested engine was too weak to enter the UHO-Top15 Ratinglist: Heimdall 1.4.1, Renegade 1.2.0, Igel 3.6.0, Minic 3.41, Booot 7.3, Black Marlin 9.0, Equisetum 1.0, Clarity 8

Below you will find a diagram of the progress of Stockfish in my tests since February 2026, and below that diagram, the older diagrams.

You can save the diagrams to your PC by right-clicking them and choosing "save image".
